IIDE Proceedings 2014 – About IT Unemployment: Reflecting On Normative Aspects Of The ‘Broken Link’

The widespread use of information and communication technologies has given rise to some moral challenges that deserve particular attention. One such is the discrepancy between productivity growth and technological unemployment. This paper argues that if subsequent undesirable consequences of technological unemployment are to be avoided, there is a need for additional research to embed normative considerations into a scientific context, by linking technological progress with the ‘Ought to Be’ of the economic and societal order.

1. Introduction

Since the emergence of modernity and industrialism, humans have developed and introduced advanced machines to facilitate their work in various ways. First, machines were created to replace human physical labor, and “mechanization” became an integral element of our lives. Second, the “automatization” of human mental capabilities became an objective necessity for processing and manipulating vast amounts of information. The introduction of various sophisticated information and communication technologies (ICT) into human, social, business and industrial affairs has produced effects with differing degrees of predictability and desirability. For example, among the positive opportunities ICT offers for human development, Sartor (2012) highlights economic development, reduced administrative costs, access to education and knowledge for everyone, the elimination of distance, and moral progress. At the same time, technological development brings the risk of undesired consequences. Examples of the risks arising from the use of ICT include reduced privacy and increased control over individuals, discrimination and exclusion, ignorance and indifference, separation and loss of communication, class division, war and human distraction, and the undesired replacement of humans with ICT. Although these risks may sound like nightmares, some of them may soon become reality.

A key risk on this list is the replacement of human labor by ICT, which may give rise to unwanted unemployment. Brynjolfsson and McAfee (2011; 2014) discuss this risk extensively and open an informative and provocative debate, presenting recent statistics on the effect of information technology on employment, income inequality, skills, wages and the economy. They estimate that even if monthly job creation in the US were doubled, it would take a few decades to close the employment gap opened by the last Great Recession. Moreover, although companies are experiencing profit growth and continually investing in new technologies, hiring has not increased (ibid.).

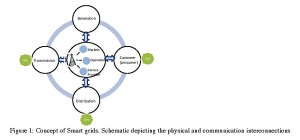

Several decades ago, economic scholarship established a causal chain in which IT deployment contributes to productivity gains, which in turn increase market demand and thereby decrease unemployment (Cesaratto et al., 2003). However, recent economic investigations suggest that we are now seeing, for the first time, early signals that technological advancement is breaking the link between productivity growth and the level of unemployment (Brynjolfsson and McAfee, 2011). The increased use of IT and widespread automation are projected to raise productivity and long-term structural unemployment at the same time (Brynjolfsson and McAfee, 2014). According to economic forecasts, spending on ICT will reach 5 trillion dollars by 2020 (Barnard, 2013), 1.7 trillion dollars more than today’s level. At the same time, GDP per capita is expected to grow substantially in both developed and developing countries. Yet technological progress is also anticipated to have a tremendous effect on unemployment in both developed and developing countries (The Economist, 2014, p. 7).

Although economic theories offer a range of perspectives on the relationship between productivity growth and technological unemployment (e.g. Postel-Vinay, 2002; Carré and Drouot, 2004; Vivarelli, 2007), it remains difficult to predict whether IT will give rise to mass unemployment and, if so, on what scale. What we do know is that more and more tasks previously performed by humans are now performed by machines. Examples include self-checkout machines in stores, online banking and mobile applications, automated telephone operators, self-service machines at airports and terminals, data-driven healthcare, and software that takes over various tasks performed by lawyers, journalists and physicians (Autor and Dorn, 2013; Frey and Osborne, 2013). While such automation clearly reduces the number of workers needed to perform those tasks, we can also see new kinds of tasks and job profiles emerging: for example, someone is needed to design, construct and maintain these automated systems. A critical evaluation of current investigations into the risk of occupations disappearing due to technological progress reveals several challenges and open questions, which in turn call for more research into the phenomenon of IT-induced unemployment, its causes and its effects.

Moreover, if the broken link between productivity growth and unemployment persists, we may expect long-term structural unemployment. Under these conditions, economic, political and social systems will need to adapt to a new reality. “If handled poorly, the widespread displacement of workers by technology could result in rapidly expanding economic divergence between rich and poor, economic poverty and social unrest for growing numbers of dislocated workers, backlashes against technology and social institutions, and economic and social decline.” (Marchant et al., 2014, p. 27). Most debates on ICT-induced unemployment focus on whether this mechanism is actually taking hold now, and if so, what its exact consequences will be and how substitution works in detail, e.g. which job tasks will be substituted and which will not. There is much less debate, however, about whether IT-driven job elimination, and the mass unemployment that may result from it, is wanted at all. This text develops some suggestions for addressing that question.[iii]

The paper is organized as follows. The next section reviews economic theory on the link between productivity growth and technological displacement, followed by a review of current empirical studies on technological displacement. It concludes that IT has indeed brought a fundamentally new feature to the relationship between technological development and social change. The subsequent section reviews moral theories and discusses the challenges contemporary computer ethics faces in aligning technological features with employment opportunities. The paper ends with a discussion in which we argue that taking ethical considerations into account in a scientific context is a necessary condition for dealing with the future consequences of technological unemployment.

2. Technological Unemployment: Theoretical Prerequisites and Empirical Evidence

This section deals with the economic theories that explore the link between productivity growth and technological unemployment. By synthesizing and evaluating the existing body of research on this relationship, it aims to give the reader a broad theoretical framework and to demonstrate both the plurality of theories and the difficulty of reaching a single conclusive message on the “broken” link. The section then analyzes current investigations of the probability that jobs will disappear, to show the potential risk of technological substitution. A critical evaluation of these studies lays the foundation for investigating the normative aspects of the current information society.

2.1 Is Economic Theory Good Enough to Explain the “Broken Link” and Resolve Its Consequences?

Since the economic community was the first to detect the threatening trend of technological unemployment, its views deserve our attention first. From the late 18th century onward, the concept of “technological unemployment” has been widely debated among economic theorists and policymakers seeking to understand its underlying causes and to predict the effect of technological change on the level of unemployment (Postel-Vinay, 2002). According to recent statistics, rising technological unemployment is a macroeconomic problem worldwide, especially in technologically advanced countries (The Economist, 2014). The social and economic consequences of these trends have prompted a number of studies, as well as conferences, congresses and discussions at the global level. This section is therefore based on a systematic review of papers from several leading economic journals that analyze changes in the level of unemployment due to technological advancement.

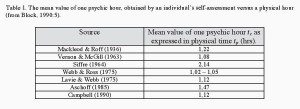

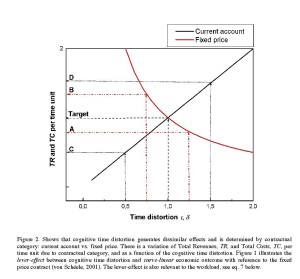

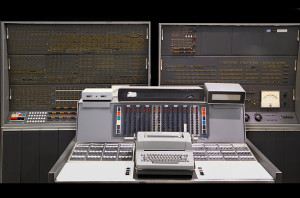

The literature review shows that the current body of theory contains a range of conceptions, within which two polar perspectives, equilibrium and disequilibrium, have been formulated (Appendix 1). These opposing standpoints stem from different views on the nature of the relationship between technological progress and the level of employment. For example, neoclassical economists believe that IT progress is always beneficial to employment, because markets can work freely and competitively. Supporters of the Ricardian view argue that, since automatic compensating factors are generally absent, innovation and IT progress are harmful to employment and are possible causes of long-term unemployment. Proponents of the Neo-Schumpeterian approach believe that technological unemployment is a transitory phenomenon and that a compensation mechanism operating through effective demand will eventually stabilize the level of employment. Finally, followers of the Keynesian tradition claim that high growth rates of output can, in principle, compensate for decreasing labor requirements in the long run.

In more detail, proponents of the equilibrium perspective believe that while technological progress destroys jobs, new occupations emerge that can absorb the released labor force (e.g. Kreickemeier and Nelson, 2006; Michelacci and Lopez-Salido, 2007; Barnichon, 2010). Different arguments have been offered in support of this perspective. For example, Stadler and Wapler (2004) argue that a general-equilibrium model of endogenous skill-biased technological change yields a reduction in high-skilled unemployment through lower wages and the creation of new positions. The relationship between technological progress, productivity growth and technological unemployment has been questioned by Barnichon (2010) and Canova et al. (2013), who emphasize that the conventional way of modeling the technological effect on unemployment does not work, because the market responds differently to neutral and investment-specific technology shocks. Eventually, however, technological and non-technological shocks balance out in their effect on the link between productivity and unemployment.

However simple the explanations of the relationship between productivity growth and the level of employment may seem, individual national economies are more complex and depend on various macroeconomic and microeconomic adjustment mechanisms. Supporters of the disequilibrium perspective point to statistics and emphasize that it is difficult to predict innovations and to disentangle the effect of technology from other macroeconomic effects, and that IT progress can lead to a jobless world (e.g. Vivarelli, 2007; Baddeley, 2008; Shahkooh et al., 2008; Pavisou et al., 2011). While economic theorists develop and test different hypotheses on the relationships between technological progress, productivity growth and technological unemployment, Brynjolfsson and McAfee (2011) claim that technological innovation has accelerated so fast that it has left many workers behind. In searching for the reasons for the high level of technological unemployment, they highlight, besides cyclicality and economic stagnation, the threat of the “end of work”. According to their study, technological displacement is observed in all sectors of the economy. The core implication of this displacement is that fewer and fewer workers will be required to produce goods and services, which will lead to a “near-workerless” world.

Given the above, empirical proof of the link between technological progress and unemployment remains open to debate. Some researchers claim that technological progress mostly leads to a restructuring of labor markets (e.g. Peláez and Kyriakou, 2008; Ott, 2012). Proponents of the technological revolution believe that technological progress stimulates consumer demand through cheaper products; as a consequence, new markets will be created and people will be able to find highly paid jobs in other spheres of employment. So-called fatalists of technological advancement emphasize that while productivity keeps growing, more and more people become jobless and the expected increase in leisure has not materialized (Aronowitz and DeFazio, 2010; Brynjolfsson and McAfee, 2011). At present, then, researchers working from different theoretical premises are unable to give a single answer as to whether IT will give rise to mass unemployment. There is therefore a need to examine empirical data on technological displacement. Below, studies of how technological advancement can transform the structure of employment are reviewed, and through critical analysis a set of research questions is identified that require further clarification if the phenomenon of IT-induced unemployment is to be understood.

2.2 Empirical Evidence on Technological Unemployment and a Need for Further Research

The human desire to create a powerful engine, an inexhaustible source of energy or a labor-saving machine is understandable. Hard physical or dangerous jobs and repetitive mental work have driven an enormous amount of research into technological development. Because computers have extensive memory and perform some tasks much faster than humans, engineering, finance, insurance and accounting are no longer possible without electronic machines. From a company owner’s point of view, it is reasonable to spend assets on technology rather than employ costly personnel. This is because “…machines require no wages or benefits, take no sick days or vacations, provide a consistent, highly reliable quality of work for up to twenty-four hours a day, seven days a week if needed, and incur no injuries…” (Marchant et al., 2014, p. 28). Yet new technologies are costly and require substantial expenditure on their purchase and maintenance. Employees, for their part, want to remain competitive on the labor market to secure long-term employment. Both sides therefore need knowledge to predict what changes technological progress may bring to the labor market and which professions will survive.

Human society is now at a new stage in world history, in which computer technologies are changing the nature of labor and the economy in general (Autor et al., 2003; Goos and Manning, 2007; Frey and Osborne, 2013). Computers not only complement human work but also fully substitute for some jobs. This would not deserve so much attention if computers could substitute only for manual labor. However, new computers are becoming increasingly competitive with the human brain in areas such as law, financial and banking services, wholesale, medicine and education (Rotman, 2013). Driverless cars developed by Google (Frey and Osborne, 2013), hospital robots (Bloss, 2011), and powerful, intelligent robots that can learn and behave in a manner similar to human beings (Peláez and Kyriakou, 2008) are only a few examples of current technological achievements that we are likely to see in widespread use before long.

In his book, Nye (2006) reflects on current changes in the labor market caused by the implementation of technology, and on their consequences for working conditions, technological efficiency and the production system. Tracing a line from factory production through Taylorism, Ford’s assembly line, and lean and just-in-time production, the author summarizes some principal characteristics of widespread computerization that resemble the effects of industrialization. Among these are unemployment of skilled artisans, monotonous low-wage work for others, high wages for a few mechanics, some new jobs in the hierarchy and the shift to white-collar work. A high level of job elimination is among the changes observed. The question “…will all the jobs disappear due to computer substitution?” (p. 118), raised by Nye, therefore seems quite reasonable.

There is growing concern in the research community about how computerization will change the structure of employment. For example, Goos and Manning (2007) observe a growing labor market polarization between high-income cognitive jobs and low-income manual occupations. Frey and Osborne (2013), examining 702 occupations in the US, conclude that about 47% of total US employment is at high risk of computerization. Occupations in transportation and logistics, office and administrative support, and production are projected to be at the greatest risk of vanishing. Studying the structural shift in the labor market, Autor and Dorn (2013) note that by 2050, 80% of activities in the automotive sector, 70% in the oil, chemicals, coal, rubber, metal and plastic products, shoe and textile sectors, 60% in the security, surveillance and defense sector, 45% in the health care sector and 30% in tourism will be substituted by computers. The Brussels European and Global Economic Laboratory (Bruegel) estimated that over the coming decades almost 50% of occupations in Sweden, the UK, the Netherlands, France and Denmark will be fully automated. The countries at highest risk include Romania, Portugal, Croatia and Bulgaria, where almost 60% of occupations are expected to be substituted by new technologies.

The statistics on technological displacement produced by current studies are frightening. However, what they give us is only the probability that a given occupation will be computerized. What we currently lack is knowledge of the dynamics by which jobs are killed and created. A number of questions remain unanswered:

* What kinds of jobs have been killed by technological advancement?

* What are the rationale and dynamics of killing jobs?

* What kinds of jobs currently exist?

* What kinds of jobs are subject to being fully or partly substituted by computers and why?

* What kinds of jobs are most probably not subject to substitution by automation in the near future and why?

* What kinds of jobs are created and what are the conditions for their creation?

* Will there be sufficient work opportunities on the labor market for all citizens in the future?

What is clear is that technological progress leads to vast changes in the nature of work, leisure time and the way we consider social issues (Aronowitz and DeFazio, 2010). Therefore, there is a need for an increased understanding of the ongoing trend and underlying mechanisms of technological displacement.

All the above-mentioned studies support the idea that occupations requiring creative and social intelligence have a better chance of surviving on the labor market. Companies increasingly seek inventors and creative employees rather than simple technicians. One of the core ideas widely discussed among policymakers is to equip the next generation of employees with the knowledge and skills needed to fill the skill gap in non-routine tasks. Yet little attention is paid to the fact that humans have different mental abilities. Even new educational programs cannot prepare everyone for workplaces where high creativity and advanced education are prerequisites, so some people will be excluded from them. Moreover, there are no recommendations as to where those people could be employed so that they can at least meet their basic needs. Hence, the effect of technological advancement on the level of employment is a complex problem that requires comprehensive insight. It is not enough to conduct economic and operational research; we also need to include social, political and ethical dimensions in our investigations.

It is well known that the economy cannot function effectively when social tension increases. Equally, social tension cannot be reduced without solving economic problems, especially unemployment. Employment protection reforms and active labor market programs are now widely used (Sianesi, 2008). However, these attempts show that although economists and politicians are aware of the problem, they are not well prepared to respond in a timely fashion to the complex problems of technological unemployment (The Economist, 2014).

The technological paradise has not brought the expected joy and relief from work, but instead many troubles and worries. When we consider research into technological development, growing investment in new technologies and the efficient use of those technologies, we unconsciously expect higher living standards. However, access to the benefits of technological progress is limited, and people face the negative consequences of technological unemployment (Nilsson and Agell, 2003; De Witte, 2005; Eliason and Storrie, 2009). Job instability and wage inequality are constant companions in our lives. Some researchers emphasize that the race between technological progress and employment is a never-ending challenge (Pianta, 2005), one that must nevertheless be acknowledged and addressed as one of the most important factors of social stability. Hence, the question of what recent technological advancement has brought to the labor market, to humans and to society as a whole is still open, and it requires detailed consideration by different research communities if they are to react to turbulent changes in a timely manner.

3. Questioning Traditional Moral Principles towards the Alignment of New Features and Employment Opportunities of Technological Advancement

At its simplest, human labor exists to provide for basic needs such as food, clothing and shelter. As living standards rose and basic needs were satisfied, new motivators such as self-realization and self-actualization came into play. At some point, the idea that everyone has to be employed became an axiom, and it remains valid today. New features of the information age, such as easy access to information, the Internet, the digitization of workplaces, and the cheap storage, processing and transmission capacity of modern ICT (Schienstock et al., 1999), have had their own impact on the nature of labor in general and on the employment structure in particular. Although these features brought new opportunities, they also made it harder to rely on traditional moral concepts (Johnson, 2001). While the shift to the information society has already occurred, the social and ethical implications of ICT are still not well established (Bynum and Rogerson, 2004). In this section we therefore discuss the theoretical premises of moral principles concerning human beings and labor, how technological advancement has challenged them, and possible ways to modify and reinterpret them in light of the current situation of technological unemployment.

Have computers brought special moral issues that require the development of a new and independent branch of moral philosophy? This question has been addressed by many scholars in both computer science and philosophy. For example, Tavani (2001, 2002), taking a middle-ground position, describes the spectrum of opinions regarding computer ethics. On one side of this spectrum are the so-called traditionalists, who believe that the shift to the information age did not raise any new issues concerning moral norms and rules, and that traditional ethical principles remain fully applicable (Adam, 2001). On the other side are scholars who claim that computers have brought special and unique aspects that require a new field of research in philosophy (Johnson, 2001; Bynum and Rogerson, 2004; Gorniak-Kocikowska, 2007). Departing from these polar standpoints, Floridi and Sanders (2002) conclude that although computer ethics issues are not wholly unique, they challenge standard macro-ethics. Finally, Bynum (2001) suggests that once computer technologies are widely implemented in our lives, computer ethics will dissolve into ordinary ethics.

The intention of this paper, however, is not to discuss whether computer ethics has the right to be an independent field of moral philosophy. Its aim is to challenge general moral attitudes in relation to the increasing rate of technological unemployment. Although computerization has brought new opportunities and increased income for some social classes, for others its effect has been the opposite. Which ethics will help us avoid policy and economic vacuums and formulate new social policies that respond responsibly to new technological features? It is increasingly accepted that the new features and opportunities of technological advancement cannot be supported by a common moral system (Bynum and Rogerson, 2004). The current moral landscape and the broken link between productivity growth and technological unemployment are therefore exactly the kind of issue that deserves particular attention from the economic, philosophical and IS communities.

The Association for Computing Machinery, the Information Technology Association of America, the Data Processing Management Association and the International Federation for Information Processing are organizations that develop and revise codes of computer ethics. Privacy, accuracy, security, reliability and intellectual property are the core issues that form the basis of these codes at the micro level (Johnson, 2001). The main postulate of these codes from a macro perspective is that computer technologies should not produce side effects that harm humans and society. However, current data indicate that the widespread use of computers has led to technological unemployment. Job insecurity has a range of negative effects on health and well-being (De Witte, 2005). Job losses increase the rates of suicide and alcohol-related mortality (Eliason and Storrie, 2009) and of crime (Nilsson and Agell, 2003). The most damaging effect of unemployment is thus on people’s psychological well-being: they cannot meet their financial obligations, their social position worsens, and they feel insecure about their future. Hence the question arises: why should people experience such emotional trauma in a society where productivity is growing and living standards are improving?

The EU’s core principles of sustainable peace, social freedom, consensual democracy, associative human rights, supranational rule of law, inclusive equality, social solidarity, sustainable development and good governance are all based on a pluralist approach to normative moral principles (Manners, 2008). The traditional norms and laws behind these principles had been functioning for a long time before the emergence of computers. The computer age brought new entities, features and ways of doing things. The high speed at which new technologies are developed and implemented has led to a situation in which society cannot react appropriately to changing conditions. Moreover, it has become difficult to draw on traditional moral systems to avoid policy and economic vacuums.

Faced with the potential destruction of jobs by automation and the resulting mass unemployment, policymakers have a number of alternatives. One unrealistic scenario would be to limit automation in order to preserve existing jobs. In a more realistic scenario, policymakers facilitate the creation of new kinds of jobs while older ones are being eliminated. A third scenario envisages a new societal order in which the state provides citizens with their basic needs. This scenario is presented in more detail below.

The changes IT has introduced in the level and structure of employment raise the question of whether it is right, and whether it should remain the norm, that everyone needs to work for a living. Although the notion of universal employment is a relatively recent invention of Western societies, our society as a whole is predominantly market-oriented and takes a functionalist and instrumental view of humans (O’Donnell and Henriksen, 2002). People are mostly valued for their contribution to society, and methods of distribution have become unfair (Johnson, 2001, p. 36). “We are now in the middle of a paradigmatic struggle. Challenged is the enriched utilitarian, rationalistic-individualistic and neoclassical paradigm which is applied not merely to the economy, but also, increasingly, to the full array of social relations” (Etzioni, 2010, p. ix). Situations in which forthcoming IT may eliminate jobs create unfamiliar ethical issues. When we face unfamiliar ethical problems, we apply analogies known from the past, and if that is not possible, there is a call to reconsider and discover new moral and ethical values (Manners, 2008). Moreover, when people discuss ethical issues, they have very little knowledge of the underlying reasons why specific behavior is wrong or unfair (Johnson, 2001). We therefore have to reach a common understanding of what we actually want from technological progress.

The assumption that an effective economic system would lead to global prosperity and equality failed during the Great Recession of 2008. Global inequality has become a real problem. Global ICT ethics therefore has to focus on the relationships “… between the weak and the strong, the rich and the poor, the healthy and the sick worldwide – and it should explore the ethical problems from the point of view of both parties involved” (Gorniak-Kocikowska, 2007, p. 56). We have to accept that human life has the highest value regardless of its contribution to society. One potential practical solution to the problem of technological unemployment could be the widespread introduction of a basic security income as a basic human right (Van Parijs, 2004; Standing, 2005), so that people can feel equally secure and still have purchasing power. Of course, this requires knowledge of how to introduce such a system without destroying people’s intrinsic motivation to express their creativity in socially useful ways. Some empirical evidence indicates, however, that people still want to contribute in positive ways in order to increase their minimal income. Surely, the process of reconsidering, modifying and reinterpreting moral principles is long and requires the active participation of the whole global community (Bynum and Rogerson, 2004). Yet as long as we do not challenge these principles, the global economy will continue to deteriorate, people will suffer from the lack of jobs and tension in society will grow.

It is presumed that technological advancement will further transform the structure of employment and that the number of available jobs will most probably continue to decline. However, this seemingly horrifying trend may be approached from the recognition that a decreasing level of employment is not only a matter of what IT is or is not capable of doing. It is rather a matter of what society wants to happen. Do we want to keep people busy working, or do we want to free them from the need to work and let them enjoy the higher living standards made possible by technological advancement? More and more academics emphasize the need to treat the human being as the basic and most valuable unit of analysis when aligning technological features and new technological possibilities. The new ethical and social issues caused by IT development force us to search for new solutions grounded in core normative premises about what is “right” and “wrong” for humans in the information society.

4. Concluding Remarks and Some Thoughts about the Near Future

Despite the destructive potential and risks of technological unemployment, such as social tension and differentiation, physical and mental illness and a growing level of crime, we cannot neglect some positive consequences, such as entirely new occupations, increased competition, and a reconsidered value of labor and leisure. When we refer to technological advancement, we have to take into account the value of technologies that have replaced people in heavy, dirty and dangerous work (Carro Fernandez et al., 2012). Many lives have been saved through health information technologies. Telecommuting has become one of the main factors behind lower work-family conflict and higher job satisfaction through flexible work arrangements (Severin and Glaser, 2009). The increased speed of communication has allowed us to communicate over distances and make decisions much faster. In this respect, we can already appreciate some positive effects of computerization. Yet, according to economic forecasts, the number of jobs will decrease enormously for both routine and non-routine work, and new jobs will not be able to absorb all the unemployed. Therefore, the questions arise: what changes do we expect in the level of employment in the near future, and how can we cope with them? Some key thoughts on the current changes brought about by technological advancement in the sphere of employment are presented below. The text ends with suggestions for further research on the interplay between computerization, productivity growth, technological unemployment and their societal consequences.

Fourthly, a few attempts have been made to stabilize the level of unemployment in advanced economies. For example, some countries, such as France and Switzerland, decreased working hours and thus tried to share the work among employees while keeping income at the same level as before (Van den Besselaar, 1997). Interestingly, the results of this practice were neither clearly good nor clearly bad (Rifkin, 2001). Another promising attempt is the introduction of a basic income. This practice is intended to provide everyone with a reasonable income to satisfy basic needs. Eventually, it is expected that this practice will encourage people to develop their capabilities and competencies in a society of rising prosperity. Yet this will require a reconsideration of other policies. Will humanity be able to create a stable global society in which humans and machines coexist and everyone benefits? Undoubtedly, different scenarios of the future can be conceived and discussed. The stakes here are nothing less than whether we establish societies where social inequality grows and brings social unrest, or societies where the highest value is human life, with equal rights and opportunities for everyone.

Fifthly, and finally, technology is a part of social evolution (Nye, 2006), yet it forces us to “…reconstruct our environment and to reconsider the ethical foundations of techno-economic decisions…” (Peláez and Kyriakou, 2008, p. 1192). Both the inertia of human thinking and the desire for profit among individuals who have access to technological advancement make the reconsideration of moral principles difficult. Instead of competing with machines, we have to accept a future of prolonged education, early retirement and free time. Yet it will take time to build acceptance of an integrative and harmonious society in which humans and machines complement each other. Alongside the search for the causes of technological unemployment and the economic theory underlying it, we should probably focus on the human position in socioeconomic relationships and challenge the normative assumptions behind our expectations of technological progress. Brynjolfsson and McAfee (2014) claim that the digitization of society will force us to reinvent social and economic life. Furthermore, a new information age will change our consciousness of technological, societal and economic issues.

Given the above, we have to recognize that if we do not act now, but wait for years to see what the present technology-induced transformation will actually lead to, we may find ourselves in highly undesirable societal and economic conditions, and it may then be too late to address them. Therefore, to enable policymakers to make informed decisions, investigations are needed that provide additional understanding of the mechanisms underlying the ongoing trend. At the same time, we have to recognize that the economic and societal mechanisms of technology adoption, productivity gains and unemployment are not governed by isolated deterministic laws, which implies that understanding the ongoing trend is not enough. It is also necessary to establish which economic and societal features are desirable with regard to moral considerations. Based on this knowledge, we may be able to develop future scenarios that bridge the current situation and what we desire from technological progress.

NOTES

i. Natallia Pashkevich – Accounting Department, Stockholm Business School, Stockholm University, 106 91 Stockholm, Sweden, npa@sbs.su.se

ii. Darek M. Haftor – School of Technology, Linnaeus University, 351 95 Växjö, Sweden, darek.haftor@lnu.se

iii. This elaboration explicitly assumes a relationship between science, ethics and society, as justified by Nowotny et al. (2001), who observed an increasing orientation of science systems towards the production of knowledge that is socially distributed and highly interactive. Scientists acknowledge that contemporary scientific practice has to be oriented towards research that satisfies the requirements, needs and goals of society (Hessels and Van Lente, 2008). As technology development strongly depends on science (Munoz, 2004), we assume that both have to serve the well-being of humankind.

REFERENCES

Adam, A. (2001). Computer ethics in a different voice. Information and Organization, 11(4), 235-261.

Alexopoulos, M. (2003). Growth and unemployment in a shirking efficiency wage model. The Canadian Journal of Economics / Revue canadienne d’Economique, 36(3), 728-746.

Arkes, H., Shaffer, V. A. & Medow, M. A. (2007). Patients derogate physicians who use a computer-assisted diagnostic aid. Medical Decision Making, 27, 189–202.

Aronowitz, S. & DeFazio, W. (2010). The Jobless Future. University of Minnesota Press, Minneapolis, MN.

Autor, D. & Dorn, D. (2013). The growth of low skill service jobs and the polarization of the labor market. American Economic Review, 103(5), 1553-1597.

Autor, D. H., Levy, F. & Murnane, R.J. (2003). The skill content of recent technological change: an empirical exploration. Quarterly Journal of Economics, 118(4), 1279-1333.

Baddeley, M.C. (2008). Structural shifts in UK unemployment 1979–2005: The twin impacts of financial deregulation and computerization. Bulletin of Economic Research, 60(2), 123-157.

Barnard, C. (2013). The changing ICT world and services transformation. Annual Review of European Telecommunications and Networking, IDC EMEA, 16-17.

Barnichon, R. (2010). Productivity and unemployment over the business cycle. Journal of Monetary Economics, 57, 1013-1025.

Bauer, T.K. & Bender, S. (2004). Technological change, organizational change, and job turnover. Labour Economics, 11(3), 265-291.

Bloss, R. (2011). Mobile hospital robots cure numerous logistics needs. Industrial Robot: An International Journal, 38(6), 567-571.

Brynjolfsson, E. & McAfee, A. (2011). Race against the Machine: How the Digital Revolution is Accelerating Innovation, Driving Productivity, and Irreversibly Transforming Employment and The Economy. Digital Frontier Press.

Brynjolfsson, E. & McAfee, A. (2014). The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. W. W. Norton & Company.

Bynum, T.W. & Rogerson, S. (2004). Computer Ethics and Professional Responsibility. Blackwell Publishing Ltd.

Bynum, T.W. (2001). Computer ethics: its birth and its future. Ethics and Information Technology, 3, 109-112.

Canova, F., Lopez-Salido, D. & Michelacci, C. (2013). The ins and outs of unemployment: an analysis conditional on technology shocks. Economic Journal, 123(569), 515-539.

Carre, M. & Drouot, D. (2004). Pace versus type: the effect of economic growth on unemployment and wage patterns. Review of Economic Dynamics, 7(3), 737-757.

Carro Fernandez, G., Gutierrez, S.M., Ruiz, E.S., Mur Perez, F. & Castro Gil, M. (2012). Robotics, the new industrial revolution. IEEE Technology and Society Magazine, 2, 51-58.

Cesaratto, S., Serrano, F. & Stirati, A. (2003). Technical change, effective demand and employment. Review of Political Economy, 15(1), 33-52.

De Witte, H. (2005). Job insecurity: review of the international literature on definitions, prevalence, antecedents and consequences. SA Journal of Industrial Psychology, 31(4), 1-6.

Eliason, M. & Storrie, D. (2009). Does job loss shorten life? Journal of Human Resources, 44(2), 277-302.

Etzioni, A. (2010). The Moral Dimension: Toward a New Economics. New York: The Free Press.

Fernandez-de-Cordoba, G. & Moreno-García, E. (2006). Union games: technological unemployment. Economic Theory, 27, 359-373.

Floridi, L. & Sanders, J. W. (2002). Mapping the foundationalist debate in computer ethics. Ethics and Information Technology, 4(1), 1-9.

Frey, C. B. & Osborne, M.A. (2013). The future of employment: how susceptible are jobs to computerization? Available at: http://assets.careerspot.com.au/files/news/The_Future_of_Employment_OMS_Working_Paper_1.pdf

Goos, M. & Manning, A. (2007). Lousy and lovely jobs: the rising polarization of work in Britain. The Review of Economics and Statistics, 89(1), 118-133.

Gorniak-Kocikowska, K. (2007). From computer ethics to the ethics of global ICT society. Library Hi Tech, 25(1), 47-57.

Hessels, L. K. & Van Lente, H. (2008). Re-thinking new knowledge production: a literature review and a research agenda. Research Policy, 37(4), 740-760.

Johnson, D.G. (2001). Computer Ethics, 3rd ed., Prentice-Hall, Upper Saddle River, New Jersey.

Kreickemeier, U. & Nelson, D. (2006). Fair wages, unemployment and technological change in a global economy. Journal of International Economics, 70, 451-469.

Levy, F. & Murnane, R. (2004). The New Division of Labor: How Computers Are Creating the Next Job Market. Princeton University Press.

Manners, I. A. (2008). The normative ethics of the European Union. International Affairs, 84(1), 45-60.

Manyika, J., Chui, M., Brown, B., Bughin, J., Dobbs, R., Roxburgh, C. & Byers, A. H. (2011). Big data: the next frontier for innovation, competition, and productivity, McKinsey Global Institute. Available at: http://www.mckinsey.com/insights/mgi/research/technology_and_innovation/big_data_the_next_frontier_for_innovation.

Marchant, G.E., Stevens, Y.A. & Hennessy, J.M. (2014). Technology, unemployment and policy options: navigating the transition to a better world. Journal of Evolution and Technology, 1, 26-44.

Merlino, L.P. (2010). Unemployment Responses to Skill-Biased Technological Change: Directed versus Undirected Search. Mimeo.

Michelacci, C. & Lopez-Salido, D. (2007). Technology shocks and job flows. Review of Economic Studies, 74, 1195-1227.

Moore, M.P. & Ranjan, P. (2005). Globalisation vs skill-biased technological change: implications for unemployment and wage inequality. The Economic Journal, 115(503), 391-422.

Munoz, J.M.V. (2004). Which ethics will survive in our technological society? IEEE Technology and Society Magazine, 1, 36-39.

Murray, E., Burns, J., May, C., Finch, T., O’Donnell, C., Wallace, P. & Mair, F. (2011). Why is it difficult to implement e-health initiatives? A qualitative study. Implementation Science, 6(6), 1-11.

Nilsson, A. & Agell, J. (2003). Crime, unemployment and labor market programs in turbulent times. Working Paper No. 14, IFAU, Uppsala.

Nowotny, H., Scott, P. & Gibbons, M. (2001). Re-thinking Science: Knowledge and the Public in an Age of Uncertainty, Cambridge: Polity Press.

Nye, D. E. (2006). Technology Matters: Questions to Live with (pp. 194-198). Cambridge, MA: MIT Press.

O’Donnell, D. & Henriksen, L.B. (2002). Philosophical foundations for a critical evaluation of the social impact of ICT. Journal of Information Technology, 17, 89-99.

Ott, I. (2012). Service robotics: an emergent technology field at the interface between industry and services. International Journal of Ethics of Science and Technology Assessment, 9(3-4), 212-229.

Pavisou, N-E., Tsaliki, P.V. & Vardalachakis, I. N. (2011). Technical Change, unemployment and labor skills. International Journal of Social Economics, 38(7), 595-606.

Peláez, A.L. & Kyriakou, D. (2008). Robots, Genes and bytes: technology development and social changes towards the year 2020. Technological Forecasting and Social Change, 75(8), 1176–1201.

Pianta, M. (2005). Innovation and Employment, Chapter 22 in Fagerberg, J., D.C. Mowery and R.R. Nelson (eds.): The Oxford Handbook of Innovation, Oxford University Press, Oxford, New York 2005.

Pierrard, O. & Sneessen, H. (2003). Low-skilled unemployment, biased technological shocks and job competition, IZA Discussion Paper 784, Institute for the Study of Labor.

Postel-Vinay, F. (2002). The dynamics of technological unemployment. International Economic Review, 43(3), 737–760.

Prat, J. (2007). The impact of disembodied technological progress on unemployment. Review of Economic Dynamics, 10(1), 106-125.

Rifkin, J. (2001). The Age of Access: The New Culture of Hypercapitalism, Where all of Life is a Paid-For Experience, New York: J.P. Tarcher/Putnam.

Riley, R. & Young, G. (2007). Skill heterogeneity and equilibrium unemployment. Oxford Economic Papers, 59, 702-725.

Rotman, D. (2013). How technology is destroying jobs. MIT Technology Review.

Sargent, T.C. (2000). Structural unemployment and technological change in Canada 1990-1999. Canadian Public Policy, 26, S109-S123.

Sartor, G. (2012). Human Rights in the Information Society: Utopias, Dystopias and Human Values. Chapter 15 in Corradetti, C. (Ed.), Philosophical Dimensions of Human Rights: Some Contemporary Views. Springer, Dordrecht, Heidelberg, London, New York.

Schienstock, G., Bechmann, G. & Frederichs, G. (1999). Information Society, Work and the Generation of New Forms of Social Exclusion (SOWING): the Theoretical Approach. TA-Datenbank-Nachrichten, Nr. 1, 8. Jg., März 1999.

Severin, H. & Glaser, J. (2009). Home-based telecommuting and quality of life: further evidence on an employee-oriented human resource practice. Psychological Reports, 104(2), 811-821.

Shahkooh, K.A., Azadnia, M. & Shahkooh, S.A. (2008). An investigation into the effect of information technology on the rate of unemployment. Proceedings of Third 2008 International Conference on Convergence and Hybrid Information Technology, 61-65.

Sianesi, B. (2008). Differential effects of active labor market programs for the unemployed. Labour Economics, 15(3), 370-399.

Soete, L. (2001). ICTs, knowledge work and employment: the challenges to Europe. International Labour Review, 140(2), 143-163.

Stadler, M., & Wapler, R. (2004). Endogenous skilled-biased technological change and matching unemployment. Journal of Economics, 81(1), 1-24.

Standing, G. (2005). Why basic income is needed for a right to work. Rutgers Journal of Law and Urban Policy, 2(1), 91-102.

Tavani, H. T. (2001). The state of computer ethics as a philosophical field of inquiry: some contemporary perspectives, future projections, and current resources. Ethics and Information Technology, 3(2), 97-108.

Tavani, H. T. (2002). The uniqueness debate in computer ethics: what exactly is at issue, and why does it matter? Ethics and Information Technology, 4(1), 37-54.

The Economist. (2014). Coming to an office near you, January 18, 7-8.

Van den Besselaar, P. (1997). The future of employment in the information society: a comparative, longitudinal and multi-level study. Journal of Information Science, 23(5), 373-392.

Van Parijs, P. (2004). Basic income: a simple and powerful idea for the twenty-first century. Politics and Society, 32(1), 7-39.

Vivarelli, M. (2007). Innovation and employment: a survey. IZA Discussion Paper No. 2621.

Weiss, M. & Garloff, A. (2011). Skill-biased technological change and endogenous benefits: the dynamics of unemployment and wage inequality. Applied Economics, 43(7), 811-821.

![Appendix 1 [3]](http://rozenbergquarterly.com/wp-content/uploads/2015/01/120114IIDEProc-page-091-219x300.jpg)

![Appendix 1 [d]](http://rozenbergquarterly.com/wp-content/uploads/2015/01/120114IIDEProc-page-092-300x156.jpg)