ISSA Proceedings 2010 – Arguing Towards Truth: The Case Of The Periodic Table

1. Preliminaries

For over a decade I have been presenting papers that include a theory of emerging truth that I feel is a contribution towards understanding the relation of substantive arguments to their evaluation (Weinstein, 2009, 2007, 2006, 2006a, 2002, 1999). Substantive arguments address crucial issues of concern and so, invariably in the modern context, rely on the fruits of inquiry for their substance. This raises deep epistemological issues; for inquiry is ultimately evaluated on its epistemological adequacy, and basic epistemological concepts are none too easy to exemplify in the musings of human beings. The traditional poles are knowledge and belief; in modern argumentation theory this is reflected in the distinction between acceptance and truth (Johnson, 2000). Crudely put, the rhetorical concern for acceptance is contrasted with the logical concern for truth, with acceptability being a bridge between them in much of informal logic and argumentation theory.

It seems to me that the legacy of formal logic, embedded without much notice in much of informal logic and argumentation theory, creates a problem for an account of the logic of substantive inquiry and a muscular identification of acceptability with truth. The root problem is the model of argument as premise-conclusion relations and argumentation seen as a series of such. In a recursive model, so natural in formal systems, evaluation works from the bottom up, in the standard case, by assigning truth to propositions. But ascertaining the truth of elements, except in relatively trivial circumstances, points away from the particulars and towards the context. This is particularly true of inquiry, and so is essentially true of substantive arguments that rely on the fruits of inquiry. For if we take the best of the fruits of inquiry available we find that truth of elements, although frequently a pressing local issue, is rarely the issue that ultimately drives the inquiry. Truth of elements is superseded by what one might call network concerns. And it is upon network relations that an adequate notion of truth in inquiry can be constructed. My ultimate goal is to defend a model of emerging truth as a bridge between acceptability and truth. That is, to indicate a logical structure for acceptability that, at the limit, is as true as we can ever hope for. In this paper I want to show that the model of emerging truth captures the large structure of the inquiry that supports the acceptance of the Periodic Table, about as true a thing as we can expect.

My model of emerging truth (abbreviated in the technical appendix) relies on three intuitive network principles: consilience, that is, the increasing adequacy of empirical description over time; breadth, the scope of a set of theoretic constructs in application to a range of empirical descriptions; and depth, a measure of levels of theoretic redefinition, each one of which results in increasing breadth and higher levels of consilience.

A theory of truth that relies on the satisfaction of these three constraints creates immediate problems if we are to accept standard logical relations. The most pressing within inquiry is the relation of a generalization and its consequences to counterexamples. Without going into detail here, the model of truth supports a principled description of the relation of counterexamples to warranted claims that permits a comparative evaluation to be made rather than a forced rejection of one or the other as in the standard account (technical appendix, Part II). Such a radical departure from standard logic requires strong support, and although my theory of truth offers a theoretic framework, without a clear empirical model my views are easily overlooked as fanciful.

2. Why the periodic table?

If you ask any sane, relatively well-educated person what the world is really made of, the response is likely to be something about atoms or molecules. Why is this so? Why is the prevailing ontology of the age based on modern physical science? What prompted this ontological revision away from ordinary objects as primary and to the exclusion of the host of alternative culturally embedded views, especially those supported by religion and a variety of traditional explanatory frameworks, whether lumped together as folklore or more positively as common sense? The obvious explanation is the growing conviction that science yields truth.

There is no doubt that the shift is a result of the amazing practical advances of the last few centuries, the entire range of scientific marvels put at our disposal, from cyclotrons to computers, from the amazing results of material science and the creation of synthetics to the understanding of the very stuff of genetic coding in the cell. It seems equally obvious to me that the one object that anchors this enormous array of understanding and accomplishment is the Periodic Table of Elements.

The concern for truth that disputation reflected as the Periodic Table advanced dialectically in light of changing evidence and competing theoretic visions mirrors the three main considerations that form the standard accounts of truth in the philosophical literature. The over-arching consideration is the immediate pragmatic advantage in terms of the goals of inquiry, that is, an increasing empirical adequacy and the depth of cogency of theoretic understanding. These pragmatic considerations, along with practical effectiveness in relation to applications of inquiry in engineering and other scientific endeavors, point to the major epistemological considerations that practical success reflects, that is, higher conformity to expectations, empirical adequacy, the basic metaphor for correspondence in the standard theory of truth, and increasing inferential adequacy and computational accuracy, coherence in the standard account. The relation between these and my trio, consilience, breadth and depth, can only be hinted at in the abbreviated version. Roughly, each of my three contributes in a different way to the standard three. But I hold my account liable to these standard desiderata as well as to the demand of descriptive adequacy. So if my theory of truth in inquiry is adequate, it must be proved against the Periodic Table.

My original conviction was based on a rather informal reading of Chemistry and its history. Despite the relative superficiality of my engagement, it seemed apparent that the salient aspects of truth that my model identified were readily seen within the history of chemical advancement and its gradual uncovering of the keystone around which the explanatory framework of physical science was to be built. It was not until recently that I was able to test my intuition against an available and expert account of the development of the Periodic Table. Such an account now exists in the thoughtful and well-researched philosophical history of the table by philosopher and historian of chemistry Eric Scerri (2007). I rely heavily on his account for specifics.

But first, a brief comment about arguments. It seems safe to say that, for scientifically oriented argumentation theorists, exploring the literature still available in actual records of argumentation among the chemists involved would be fascinating. Eavesdropping on their discussions would be even more fascinating for those who see the study of argumentation as involving rhetorical details and actual argumentative exchanges between interlocutors. Such an approach is natural within conceptions of argument seen as debates and dialogue games. But in inquiry, so it seems to me, the perspective needs to be broader than ‘persuasion dialogues.’ An alternative looks at argumentation in the large, that is, seeing how the dispute evolves around the key poles that drive the actual developing positions in response to the activities, both verbal and material, of the discussants. Such a perspective in the theory of argument permits a more logical turn, exposing the shifting epistemological structure that undergirds the dialogue in so far as it is reasonable. It enables the epistemological core to be seen. For in this larger sense the rationality of the enterprise can be seen not merely in terms of individuals and their beliefs, but in the gradual exposure of the warrants underlying the points at issue. In what follows I will indicate the participants as points of reference for those who might want to see to what extent the actual dialogues among chemists reflect the epistemological warrants. Scerri, in his marvelous and detailed account, presents the details of the competing positions and their shifts as the evidence and theories change. My purpose here is to identify the large epistemological structures that, in so far as I am correct, ultimately warrant the present consensus.

3. The Periodic Table

The first realization that sets the stage for a renegotiation of the theory of truth is that there is no clear candidate for what the Periodic Table of Elements is. That is not to say that the choices are random or widespread, but rather that even after more than a century, the debate as to the most adequate format for the Periodic Table of Elements is ongoing (among other things, the placement of the rare earths remains a point of contention, pp. 21-24). For now and for the foreseeable future both the organization and details of the Periodic Table are open to revision in light of the ends for which it is constructed. To account for this we require some details.

The work of John Dalton at the beginning of the 19th century is a convenient starting place for the discussion of the Periodic Table since he postulated that ‘the weights of atoms would serve as a kind of bridge between the realm of microscopic unobservable atoms and the world of observable properties’ (p. 34). This was no purely metaphysical position, but rather reflected the revolution in Chemistry that included two key ideas. Lavoisier took weighing residual elements after chemical decomposition as the primary source of data and Dalton maintained that such decomposition resulted in identifiable atoms. This was Dalton’s reconstitution of the ancient idea of elements, now transformed from ordinary substances to elements that were the result of chemical decomposition. Studies of a range of gases, by 1805, yielded a table of atomic and molecular weights that supported the ‘long recognized law of constant proportions…when any two elements combine together, for example, hydrogen and oxygen, they always do so in a constant ratio of their masses’ (pp. 35-36). Scerri epitomizes this period, begun as early as the last decade of the 18th century by Benjamin Richter, who published a table of equivalent weights, as that of finding meaningful quantitative relationships among the elements. It was a period that both yielded the possibility of precision and opened theoretic descriptions to all of the vagaries of empirical measurements: open to the full problematic of weakly supported theories, new and developing procedures of measurement, and the complex nature of the measurement process itself, measures that were open to change and refinement as techniques were improved and experimenters gained more experience.

In hindsight many of the problems that confronted the chemists reflected a conceptual issue expressed in empirical incongruities: atomic weight is not invariably reflected in equivalent weight and so the underlying structure was not readily ascertained by finding equivalent weights, the core empirical tool. For without knowing the correct chemical formula, there is no way to coordinate the correct proportions against the observed measurements of the weight of component elements in ordinarily occurring chemical compounds. And as it turns out, ‘the question of finding the right formula for compounds was only conclusively resolved a good deal later when the concept of valency, the combining power of particular elements was clarified by chemists in the decade that followed by Edward Frankland and Auguste Kekule working separately’ (p. 37).

The initial problems, including Dalton’s infamous mistaken formula for water, were the result of empirical incongruities seen in light of a core integrating hypothesis: the law of definite proportion by volume, expressed in 1809 by Gay-Lussac as: ‘The volume of gases entering into a chemical reaction and the gaseous products are in a ratio of small integers’ (p. 37). Held as almost a regulative principle, the law was confronted with countless counterexamples: recalcitrant, yet often roughly accurate, measurements that reflected the lack of knowledge of the time. A common occurrence throughout the history of science, early chemistry reflects the competing pull of empirical adequacy and theoretic clarity. Not one to the exclusion of the other, but both in an uneasy balance. This was reflected in many disputes, but the one that reflects the deepest thread that runs through the history of the Table is Prout’s Hypothesis. Scerri identifies the key insight: the rather remarkable fact that ‘many of the equivalent weights and atomic weights appeared to be approximately whole number multiples of the weights of hydrogen’ (p. 38). This was based on the increasing numbers of tables of atomic weights available in the first decades of the 19th century. But it was not merely increasing data that drove the science. The two poles, not surprisingly, were the attempts to offer empirically adequate descriptions that demonstrate sufficient structural integrity in light of underlying theoretic assumptions exemplified in the law of definite proportions. Prout’s hypothesis, that elements are composed of hydrogen, first indicated in an anonymous publication in 1819, offered a deeply unifying insight: if everything was composed of one element, the law of definite proportions was an immediate corollary. The bold hypothesis was based on ‘rounding off’ empirical values of the comparative weights of elements, as an index of the atomic weights, to whole number multiples of 1, the presumed atomic weight of hydrogen. Available data created roadblocks. In 1825, the noted chemist Jacob Berzelius ‘compiled a set of improved atomic weights that disproved Prout’s hypothesis’ (p. 40). Prout’s hypothesis, however, whatever its empirical difficulties, ‘proved to be very fruitful because it encouraged the determination of accurate atomic weights by numerous chemists who were trying to either confirm or refute it’ (p. 42).

But there was more to the story. Quantitative relationships have an essential yield beyond the increased ability to offer precise descriptions that may be subjected to increasingly stringent empirical testing. That is, they open themselves to structural interpretations. Available data quickly afforded systematization as a prelude to eventual theoretic adequacy. The first effort to systematize known empirical results can be attributed to the German chemist Johann Döbereiner, who in 1817 constructed triples of elements which showed chemical similarities and most essentially showed ‘an important numerical relationship, namely, that the equivalent weight, or atomic weight of the middle is the approximate mean of the values of the two flanking elements in the triad’ (p. 42). This moved the focus from constructing tables of atomic weights to looking more closely at the relationships among known values. It led to an initial structural unification of the table of elements through the identification of more triads, triples of elements that show clear ratios between their equivalent weights and therefore their presumed atomic weights. Other chemists, notably Max Pettenkofer and Peter Kremers, worked with similar constructions, which culminated in Ernst Lenssen fitting all 58 known elements into a structure of 20 triads. But the problem of ascertaining atomic weights still resulted in competing values and contrasting constructions. By 1843 a precursor to the periodic table was published by Leopold Gmelin, a system that combined some 53 elements in an array that reflected their chemical and mathematical properties and accurately organized most known elements in groups that would later reflect the underlying principles of the periodic table.
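The triad relation lends itself to a small numerical check. The sketch below is only an illustration: it uses modern rounded atomic weights rather than the values available to the early chemists, and both the function names and the 2% tolerance are hypothetical choices made here, not anything drawn from Scerri.

```python
# Modern standard atomic weights, rounded. These are an assumption added for
# illustration; they are not the data the early nineteenth-century chemists used.
TRIADS = {
    ("Li", "Na", "K"): (6.94, 22.99, 39.10),
    ("Ca", "Sr", "Ba"): (40.08, 87.62, 137.33),
    ("Cl", "Br", "I"): (35.45, 79.90, 126.90),
}


def triad_error(light, middle, heavy):
    """Relative gap between the middle weight and the mean of the flanking weights."""
    mean = (light + heavy) / 2
    return abs(middle - mean) / middle


def is_triad(weights, tolerance=0.02):
    """Hypothetical acceptance test: the middle weight lies within `tolerance` of the mean."""
    return triad_error(*weights) <= tolerance


for names, weights in TRIADS.items():
    print(names, round(triad_error(*weights), 4), is_triad(weights))
```

How tight the tolerance should be is exactly the kind of question that, on the account given here, is settled a posteriori by practice in the field rather than fixed in advance.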

Scerri concludes: ‘It is rather surprising that both Prout’s hypothesis and the notion of triads are essentially correct and appeared problematic only because the early researchers were working with the wrong data’ (p. 61). Prout is, of course, correct in seeing hydrogen as the basis of the elements, since hydrogen with one proton serves as the basis as we move across the Periodic Table, each element adding protons in whole number ratios based on hydrogen with one proton. The number of protons yielded the final organizational principle of the table, once atomic number was distinguished from atomic weight, which includes the contribution from neutrons, unknown until the mid-20th century. And similarly for earlier structural models based on triads. It was only after the famous hypothesis of Amedeo Avogadro of 1811 was championed by Stanislao Cannizzaro at midcentury that chemists had a firm enough footing to develop increasingly adequate measurements of atomic weight and began to see the shape of the underlying relationships.

The increase in triads is an example of the most basic of the requirements for sustaining a generalization against counterexamples. The empirical evidence, its models, form a model chain; technically, there is a function that maps the hypothesis onto a set of models (or near models) and the model chain is progressive, that is, the set of models is increasing over time (technical appendix, Part I, 1.1). The dialectical force of counterexamples, rather than requiring rejection of either of the pair, requires an adjudication of the power of the counterexample against the weight of the model chain that it confutes. That is not to reject the counterexample, rather to moderate its dialectical force (technical appendix, Part II). This requires a number of assumptions about the models. The first is the assumption that models can be ordered, and the second that approximation relationships can be defined that support the ordering. The latter is crucial: approximation relations (technically neighborhood relations on a field of sets) enable complex relationships among evidence of all sorts to be defined. Intuitively, approximation relations are afforded indices of the goodness of fit between the evidence and the model in respect to the terms and relationships expressed in a generalization. This has a deep affinity to the notion of acceptability in argument theory, since how narrow the acceptable approximations need to be is determined a posteriori in light of the practice in the field. This is subject to debate but is no mere sociological construct, since there is an additional requirement. The model chain must prove to be progressive, that is, the chain of models must be increasing and its members must be increasingly better approximations over time (technical appendix, Part I, 1.2).

This is evident in the history of the Periodic Table. By the 1860s the discovery of triads had moved further into the beginnings of the periodic system. By the 1880s a number of individuals could be credited with beginning a systematization of the elements. Scerri, in addition to Dimitri Mendeleev and Julius Lothar Meyer, credits Alexandre De Chancourtois, John Newlands, William Odling and Gustavus Hinrichs.

Systematization was made possible by the improved methods for determining atomic weights by, among others, Stanislao Cannizzaro and a clear distinction between molecular and atomic weight. As Scerri puts it, ‘the relative weight of the known elements could be compared in a reliable manner, although a number of these values were still incorrect and would be corrected only by the discovery of the periodic system’ (p. 67). Systematization was supported by the discovery of a number of new elements that fit within the preliminary organizing structures, and the focus was moved towards experimental outcomes without much concern for the theoretic pressure of Prout’s hypothesis, which fell out of favor as an organizing principle as the idea of simple arithmetic relationships among the elements proved harder to sustain in light of a growing body of empirical evidence.

From the point of view of my construction what was persuasive was the availability of model chains that in and of themselves were progressive (technical appendix, Part I, 1.3). That is, series of models could be connected through approximation relations despite the lack of an underlying and unifying hypothesis. And whatever the details of goodness of fit, the structure itself took precedence over deep theory (Prout’s hypothesis) in the name of a network of models connected by reasonably clear, if evolving, quantitative and chemical relationships.

The hasty rejection of Prout’s hypothesis at this juncture, despite its role as encapsulating the fundamental intuition behind the search for quantitative relationships, offers a window into what a theory of emerging truth requires. In the standard model of, for example, Karl Popper, counterexamples force the rejection of the underlying hypothesis. But as often, the counterexample is accepted, but the hypothesis persists, continuing as the basis for the search for theoretic relationships. The intuition that prompted the search for a unifying structure in terms of which the mathematical and chemical properties of the elements could be organized and displayed was sustained in the light of countervailing empirical evidence. Making sense of this requires a more flexible logic, one that permits a temporary focus on a subset of the properties and relations within a model while sustaining the set of models deemed adequate in the larger sense exhibited by the connections among models in a unifying theoretical structure. And as the century progressed the search for such a structure began to bear fruit.

By the turn of the century the core intuition, combining chemical affinities and mathematical measurements, resulted in a number of proposals that pointed towards the Periodic Table. John Newlands introduced the idea of structural level with his ‘law of octaves’; the geologist Alexandre De Chancourtois and the chemists William Odling and Gustavus Hinrichs offered structural accounts of known elements. All this culminated in the work of Lothar Meyer and, most famously, Dimitri Mendeleev, who are credited as the key progenitors of the periodic table. The proliferation of structured arrays of models reflected the key epistemic property I call ‘model chain progressive’ (technical appendix, Part I, 1.3). That is, model chains were themselves being linked in an expanding array such that the set of model chains was itself increasing both in number and in empirical adequacy. The culmination was a series of publications by Mendeleev beginning in 1869, which codified and refined the Periodic Table in various editions of his textbook, The Principles of Chemistry, which by 1891 was available in French, German and English.

Mendeleev encapsulated his findings in eight points:

‘1. The elements, if arranged according to their atomic weights, exhibit periodicity of properties.

2. Elements which are similar as regards their chemical properties have atomic weights, which are either of nearly the same values…

3. The arrangement of the elements, or of groups of elements, in the order of their atomic weights corresponds to their so-called valences…

4. The elements which are most widely diffused have small atomic weights.

5. The magnitude of the atomic weight determines the character of the elements, just as the magnitude of the molecule determines the character of the compound body.

6. We must expect the discovery of many yet unknown elements, for example elements analogous to aluminium and silicon whose weights should be between 65 and 71.

7. The atomic weight of an element may sometimes be amended by a knowledge of those contiguous elements…

8. Certain characteristic properties of the elements can be foretold from their atomic weights’ (all italics original, pp. 109-110).

As is well known, Mendeleev’s conjectures led to a number of compelling predictions of unknown elements based on gaps in the table (item 6). This is generally thought to be the most significant factor in its acceptance. Scerri maintains, and I concur, that of equal importance was the accommodation of accepted data that the system afforded (item 7). A major contribution is the correction of atomic weights due to the realization of the importance of valence (item 3). Atomic weight was not simply identical with equivalent weight but rather reflected the product of equivalent weight and valence (p. 126). This was reflected by the increase in accuracy as the power of the notion of period in guiding subsequent empirical research proved invaluable (item 1), as well as in the emerging connections between chemical and mathematical properties (items 2, 5 and 8). Even more important to the development of physical chemistry was the effect of the system on later developments in microphysics, which developed, in part, as an explanatory platform upon which the table could stand. These are all powerful considerations in accounting for the general acceptance of the periodic table in the 20th century. The last of these, indicated almost in passing in item 4, points us back to the ultimate reinterpretation and vindication of Prout’s hypothesis. For it is hydrogen, with an atomic weight of 1.00794, that moves us to the next stage in my model, the role of reduction as the harbinger of truth in science.
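The relation between equivalent weight, valence and atomic weight can be made concrete with a short sketch. The figures below are rounded textbook values added here for illustration and are not taken from Scerri; the function name is likewise a hypothetical choice.

```python
def atomic_weight(equivalent_weight, valence):
    """Atomic weight as the product of equivalent weight and valence."""
    return equivalent_weight * valence


# Rounded textbook values (an assumption for illustration): element -> (equivalent weight, valence)
EXAMPLES = {
    "O": (8.0, 2),
    "Al": (9.0, 3),
    "C": (3.0, 4),
}

for element, (eq_weight, valence) in EXAMPLES.items():
    print(element, atomic_weight(eq_weight, valence))

# Treating oxygen as if its valence were 1 simply returns its equivalent weight,
# which is how a wrong formula for a compound yields a wrong atomic weight.
print("O with valence wrongly taken as 1:", atomic_weight(8.0, 1))
```

The last line shows why the choice of formula mattered so much: the same measured equivalent weight supports different atomic weights depending on the valence assumed.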

Beginning with the discovery of the electron by J.J. Thomson in 1897, the early decades of the 20th century showed enormous progress in the elaboration and understanding of the nature of atoms. Ernest Rutherford, Wilhelm Röntgen, Henri Poincaré, Henri Becquerel, Marie Curie, Anton van den Broek, Alfred Mayer and Henry Moseley all contributed empirical and theoretical insights that led to a deeper understanding of atomic structure and its relation to the chemical and mathematical properties of the known elements, as well as the discovery of additional elements, all within the structure that the periodic table provided.

The availability of a micro theory that explained and predicted made the periodic table available for reduction. That is, the chemical elements could be reinterpreted in terms of a theoretic domain of objects based on the developing notions of the atom and especially of the electron (technical appendix, Part I, 2). Early accounts of the elements in terms of electron configurations were constructed by Gilbert Lewis, Irving Langmuir, Charles Bury and John Main Smith. That is to say, the micro theory became reduction progressive (technical appendix, Part I, 2.1). All of these early efforts were the objects of contention and none was adequate to available empirical evidence, but the power of the theoretic idea prevailed despite empirical difficulties and despite the lack of a firm grounding in a clear theoretic account of the underlying physics. This was to be changed by the seminal work of Niels Bohr and Max Planck along with many others including most notably Wolfgang Pauli, which led to quantum mechanics based on the matrix mathematics of Werner Heisenberg, the empirical and theoretical work of Douglas Hartree and Vladimir Fock and the essential work of Louis de Broglie, Erwin Schrödinger and Linus Pauling. In my terms the periodic table had become reduction chain progressive (technical appendix, Part I, 2.2). That is, the elaboration of the underlying theory was itself becoming increasingly adequate both in terms of its empirical yield, as reflected in better measurements, and in a more comprehensive understanding of the phenomena that it reduced, that is, the chemical and mathematical properties identified in the Periodic Table. And as always the theoretic advance was in the face of empirical difficulties. At no time in the development of quantum theory was there an easy accommodation between empirical fact and theoretic coherence. The various theories all worked against anomalous facts and theoretic inconsistencies. And although this was the subject of ongoing debate, the larger issue was driven by the coherence of the project as evidenced by the increasing availability of partially adequate models and intellectually satisfying accounts that initiated the enormous increase of chemical knowledge that characterizes the last century.

The power of the periodic table was not fully displayed until the reduction to a reasonably clear micro theory led to the enormous increase in breadth that characterized the chemical explanations for the vast array of substances and processes ranging from the electro-chemistry of the cell to crystallography, from transistors to cosmology. This is indicated in my model by the notion of a branching reducer (technical appendix, Part I, 2.3). It is a simple fact, although seemingly hyperbolic, that the entire mastery of the physical world evidenced by the breadth of practical applications in modern times rests on the periodic table. That is, quantum physics, through its application to the periodic table, is a progressively branching reducer (technical appendix, Part I, 2.4).

But the scope of the periodic table, resting upon an increasingly elaborate microphysics, is still not the whole story. For quantum mechanics itself has been deepened with the increasingly profound theories of particle physics. This is an area of deep theoretical and even philosophical contention and so it is possible, although extremely unlikely, that the whole apparatus could collapse. But this would require that a new and more adequate microphysics be invented that could replace the total array of integrated physical science with an equally effective alternative. Such a daunting prospect is what underlies my gloss on truth seen as the very best that we can hope for.

4. Technical Appendix

Part I:

1. A scientific structure, TT = <T, FF, RR> (physical chemistry is the paradigmatic example) where T is a set of sentences that constitute the linguistic statement of TT closed under some appropriate consequence relation and where FF is a set of functions F, such that for each F in FF, there is a map f in F, such that f(T) = m, for some model or near model of T. And where RR is a field of sets of representing functions, R, such that for all R in RR and every r in R, there is some theory T* and r represents T in T*, in respect of some subset of T.

A scientific structure is first of all, a set of nomic generalizations, the theoretic commitments of the members of the field in respect of a given body of inquiry. We then include distinguishable sets of possible models (or appropriately approximate models) and a set of reducing theories (or near reducers). What we will be interested in is a realization of TT, that is to say a triple <T, F, R> where F and R represent choices from FF and RR, respectively. What we look at is the history of realizations, that is an ordered n-tuple: <<T,F1,R1>,…,<T,Fn,Rn>> ordered in time. The claim is that the adequacy of TT as a scientific structure is a complex function of the set of realizations.

1.1. Let T’ be a subtheory of T in the sense that T’ is the restriction of the relational symbols of T to some sub-set of these. Let f’ be a subset of some f in F, in some realization of TT. Let <T’1,…,T’n> be an ordered n-tuple such that for each i,j (i<j), T’i reflects a subset of T modeled under some f’ at some time earlier than T’j. We say that T is model progressive under f’ iff:

a) T’k is identical to T for all indices k, or

b) the ordered n-tuple <T’1,…,T’n> is well ordered in time by the subset relation. That is to say, for each T’i, T’j in <T’1,…T’n> (i2), if T’i is earlier in time than T’j, T’i is a proper subset of T’j.
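Read operationally, definition 1.1 is a check on a time-ordered list of subtheories. The following is a minimal sketch under simplifying assumptions of my own: each subtheory is represented merely as a set of relational symbols, the modeling function f’ is left out entirely, and the function and symbol names are hypothetical.

```python
def is_model_progressive(theory, subtheories):
    """Check clauses (a) and (b) of 1.1 for subtheories listed in time order.

    Each subtheory is represented, as a simplifying assumption, by the set of
    relational symbols of `theory` that it retains.
    """
    full = frozenset(theory)
    stages = [frozenset(stage) for stage in subtheories]
    if not stages or any(not stage <= full for stage in stages):
        return False  # every stage must be a restriction of the theory
    if all(stage == full for stage in stages):
        return True  # clause (a): every stage is already the whole theory
    # Clause (b): each earlier stage is a proper subset of the next one.
    # Proper inclusion is transitive, so checking adjacent stages is enough.
    return all(earlier < later for earlier, later in zip(stages, stages[1:]))


# Hypothetical relational symbols, used only to exercise the check.
T = {"weight", "valence", "period"}
growing = [{"weight"}, {"weight", "valence"}, {"weight", "valence", "period"}]
shrinking = [{"weight", "valence"}, {"weight"}]

print(is_model_progressive(T, growing))    # True
print(is_model_progressive(T, shrinking))  # False
```

The sketch captures only the set-theoretic ordering; whether a given stage really is modeled under some f’ is an empirical matter that no such check can decide.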

1.2. We define a model chain C, for a theory T, as an ordered n-tuple <m1,…,mn>, such that for each mi in the chain, mi = <di, fi> for some domain di and assignment function fi; where for each mi and mj in the chain, di = dj; and where for each i and j (i<j), mi is an earlier realization (in time) of T than mj.

Let M be an intended model of T, such that f(T) = M for some f in F (for some realization <T, F, R>) and T is model progressive under f. We then say that C is a progressive model chain iff:

a) for every mi in C, mi is isomorphic to M, or

b) there is an ordering of models in C such that for most pairs mi, mj (j > i) in C, mj is a nearer isomorph to M than mi.

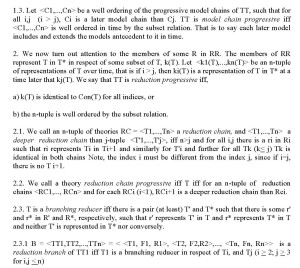

This last condition is an idealization, as are all similar conditions that follow. We cannot assume that all theoretic advances are progressive. Frequently, theories move backwards without being, thereby, rejected. We are looking for a preponderance of evidence or, where possible, a statistic. Nor can we define this a priori. What counts as an advance is a judgment in respect of a particular enterprise over time, best made pragmatically by members of the field. (To avoid browser problems, figure 1 shows part of the scheme, 1.3 – 2.3.1.)
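The "most pairs" requirement in the definition of a progressive model chain (1.2, condition b) can be made concrete with a toy sketch. Everything in it beyond the definition is an assumption: models are represented as assignments over one shared domain, "nearer isomorph" is approximated by a simple count of disagreements with the intended model M, and "most" is read as a fraction above an adjustable threshold. Since what counts as an advance cannot be fixed a priori, the threshold is a parameter and not a claim about the right value.

```python
from itertools import combinations
from typing import Dict, Hashable, Sequence

Assignment = Dict[Hashable, Hashable]


def mismatch(model: Assignment, intended: Assignment) -> int:
    """Count domain elements on which a model disagrees with M (a crude proxy)."""
    return sum(1 for element in intended if model.get(element) != intended[element])


def is_progressive_chain(chain: Sequence[Assignment],
                         intended: Assignment,
                         threshold: float = 0.5) -> bool:
    """Check conditions (a)/(b) for a time-ordered chain of models."""
    distances = [mismatch(m, intended) for m in chain]
    # Condition (a): every model in the chain already matches M.
    if all(d == 0 for d in distances):
        return True
    pairs = list(combinations(range(len(chain)), 2))  # all (i, j) with i < j
    if not pairs:
        return False
    # Condition (b): for most pairs, the later model is nearer to M.
    improving = sum(1 for i, j in pairs if distances[j] < distances[i])
    return improving / len(pairs) > threshold


# Hypothetical intended model and chain; "?" marks a wrong assignment.
M = {"a": 1, "b": 2, "c": 3, "d": 4}
chain = [
    {"a": 1, "b": "?", "c": "?", "d": "?"},  # 3 mismatches
    {"a": 1, "b": 2, "c": 3, "d": "?"},      # 1 mismatch
    {"a": 1, "b": 2, "c": "?", "d": "?"},    # 2 mismatches: a step backwards
    dict(M),                                 # 0 mismatches
]

print(is_progressive_chain(chain, M))
print(is_progressive_chain(list(reversed(chain)), M))
```

The third model in the chain moves backwards, yet five of the six ordered pairs still show improvement, so the chain counts as progressive under this reading; the reversed chain does not.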

2.4. We say that a branching reducer, T, is a progressively branching reducer iff the n-tuple of reduction branches <B1,…,Bn> is well ordered in time by the subset relation, that is, for each pair i, j (i>j), Bi is a later branch than Bj; in other words, the number of branching reducers has been increasing in breadth as inquiry persists.

Part II:

The core construction is where a theory T is confronted with a counterexample, a specific model of a data set inconsistent with T. The interesting case is where T has prima facie credibility, that is, where T is at least model progressive, that is, is increasingly confirmed over time (Part I, 1).

A. The basic notion is that a model, cm, is a confirming model of theory T in TT: a model of data from some experimental set-up or set of systematic observations, interpreted in light of the prevailing theory that warrants the data being used, and where

1. cm is either a model of T, or

2. cm is an approximation to a model of T and is the nth member of a sequence of models ordered in time and T is model progressive (1.1).

B. A model interpretable in T, but not a confirming model of T is an anomalous model.

The definitions of warrant strength from the previous section reflect a natural hierarchy of theoretic embeddedness: model progressive (1.1), model chain progressive (1.3), reduction progressive (2), reduction chain progressive (2.2), branching reducers (2.3) and progressively branching reducers (2.4). A/O opposition varies with the strength of the theory. So, if T is merely model progressive, an anomalous model is type-1 anomalous; if, in addition, T is model chain progressive, type-2 anomalous; and so on, up to type-6 anomalous for theories that are progressively branching reducers.
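The cumulative grading of anomalies just described can be sketched as a simple lookup. The enumeration names and the function are hypothetical; the sketch assumes that each level presupposes all lower ones ("if in addition"), and it returns 0 for a theory that is not even model progressive, a case the text leaves untyped.

```python
from enum import IntEnum
from typing import Iterable


class WarrantStrength(IntEnum):
    MODEL_PROGRESSIVE = 1                # 1.1
    MODEL_CHAIN_PROGRESSIVE = 2          # 1.3
    REDUCTION_PROGRESSIVE = 3            # 2
    REDUCTION_CHAIN_PROGRESSIVE = 4      # 2.2
    BRANCHING_REDUCER = 5                # 2.3
    PROGRESSIVELY_BRANCHING_REDUCER = 6  # 2.4


def anomaly_type(held: Iterable[WarrantStrength]) -> int:
    """Return n such that an anomalous model of the theory is type-n anomalous.

    Assumption: levels are cumulative, so counting stops at the first level
    the theory fails to satisfy. A result of 0 means no type is assigned.
    """
    satisfied = set(held)
    level = 0
    for strength in WarrantStrength:  # iterates in definition order, 1 to 6
        if strength not in satisfied:
            break
        level = int(strength)
    return level


merely_model_progressive = {WarrantStrength.MODEL_PROGRESSIVE}
fully_embedded = set(WarrantStrength)

print(anomaly_type(merely_model_progressive))
print(anomaly_type(fully_embedded))
```

On this reading, a theory that is reduction progressive without being model chain progressive would still yield only type-1 anomalies, since the intermediate level is missing.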

P1. The strength of the anomaly is inversely proportional to dialectical resistance, that is, counter-evidence afforded by an anomaly will be considered as a refutation of T as a function of strength of T in relation to TT. In terms of dialectical obligation, a claimant is dialectically responsible to account for type 1 anomalies or reject T and less so as the type of the anomalies increases.

P2: Strength of an anomaly is directly proportional to dialectical advantage, that is, the anomalous evidence will be considered as refuting as a function of the power of the explanatory structure within which it sits.

P*: The dialectical use of refutation is rational to the extent that it is an additive function of P1 and P2.

REFERENCES

Johnson, R.H. (2000). Manifest Rationality. Mahwah, NJ: Lawrence Erlbaum.

Scerri, E.R. (2007). The Periodic Table: Its Story and Its Significance. New York: Oxford University Press.

Weinstein, M. (1999). Truth in argument. In F.H. van Eemeren, R. Grootendorst, J.A. Blair, & C.A. Willard (Eds.), Proceedings of the Fourth Conference of the International Society for the Study of Argumentation (pp. 168-171). Amsterdam: Sic Sat.

Weinstein, M. (2002). Exemplifying an internal realist theory of truth. Philosophica, 69 (1), 11-40.

Weinstein, M. (2006). A metamathematical extension of the Toulmin agenda. In D. Hitchcock & B. Verheij (Eds.), Arguing on the Toulmin Model: New Essays on Argument Analysis and Evaluation (pp. 49-69). Dordrecht, The Netherlands: Springer.

Weinstein, M. (2006a). Three naturalistic accounts of the epistemology of argument. Informal Logic, 26 (1), 63-89.

Weinstein, M. (2007). A perspective on truth. In H. Hansen & R. Pinto (Eds.), Reason Reclaimed (pp. 91-106). Newport News, VA: Vale Press.

Weinstein, M. (2007a). Between the two images: Reconciling the scientific and manifest images. Proceedings of the Ontario Society for the Study of Argument Conference: Dissensus & the Search for Common Ground. (ISBN 978-0-9683461-5-0).

Weinstein, M. (2009). A metamathematical model of emerging truth. In J-Y. Béziau & A. Costa-Leite (Eds.), Dimensions of Logical Concepts, Coleção CLE, vol. 55 (pp. 49-64). Campinas, Brazil.